Problem Statement

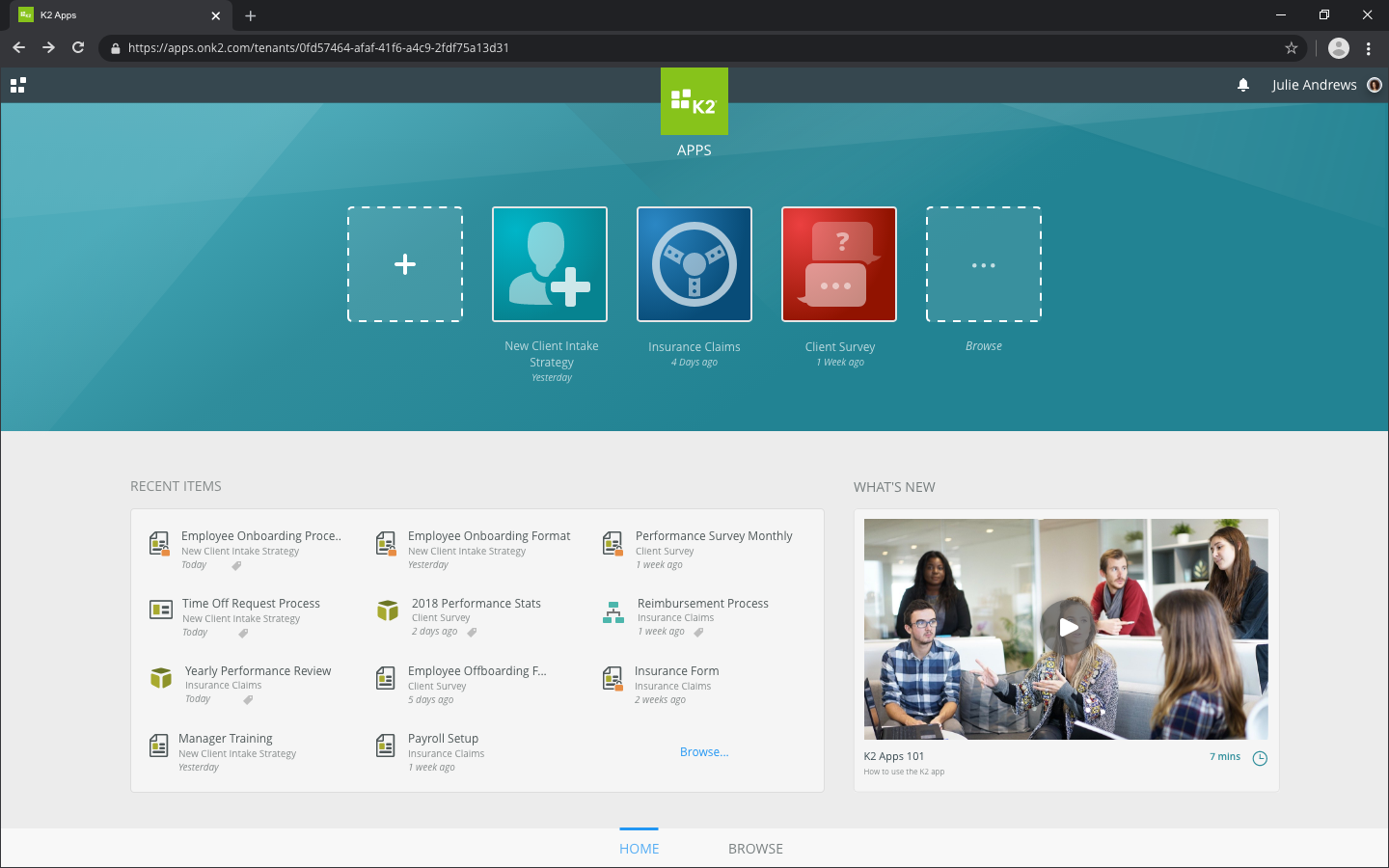

I was tasked with creating initial baseline insight for the MVP release of our new App Designer. Companies use K2 to build business process automation (BPA) applications, which can take several months to create within large organizations due to task complexity, difficult onboarding, and collaboration across large teams. Our new App Designer is our approach to simplifying the building of BPA applications. Currently, we have little insight into how well our previous products have improved the process of building BPA applications for users. We needed to create a centralized source of insight and data that we could evaluate over time to help answer two primary questions.

- How are we currently doing?

- Are we headed in the right direction?

My Role

While my title was UX/UI Designer, I took on a UX Researcher role to discover and design a concept that would consolidate each design tool into a single environment to help solve the above user problems. I was the primary researcher on this project. I worked with the UX Lead and the Product Manager to develop the goals and hypotheses, and I was responsible for gathering participants, conducting research, analyzing results, and reporting.

Tools Used

- MS Excel: For analyzing Likert scale ratings and the point system for errors made and task completion.

- Lucidchart: For affinity mapping.

- MS Teams: For recording remote user testing.

- Adobe XD: For prototyping and presenting scenario slides.

- Pen & paper: For note taking.

Goals & Hypotheses

I decided that the best approach to creating baseline metrics was to use the three areas outlined in the ISO usability standards (Efficiency, Effectiveness, and Satisfaction) as our goals.

I worked with the PM and UX Lead to determine a list of hypotheses that we could use to measure each area.

- Users will know how to find and edit items someone else created.

- Users will have the features they need to organize multiple items.

- Users can do basic actions (edit/delete/rename/copy/search).

- Templates will provide all the features a user needs to package and deploy.

- App Designer will reduce the time it takes to build an App currently.

- Users have access to all the features they need to publish an app.

- Users will want to update their existing environments to App Designer.

I observed a series of tasks that I could measure to prove or disprove the above hypotheses. These tasks are outlined in the Research Methods section.

Research Methods

I conducted a remote moderated usability study to carry out a series of tests. In addition to the usability study, we also provided the product team with the following.

- Benchmark metrics

- System Usability Scale (SUS)

- Customer Journey Mapping

Timeframe

1 Sprint (2 weeks).

Personas

Users

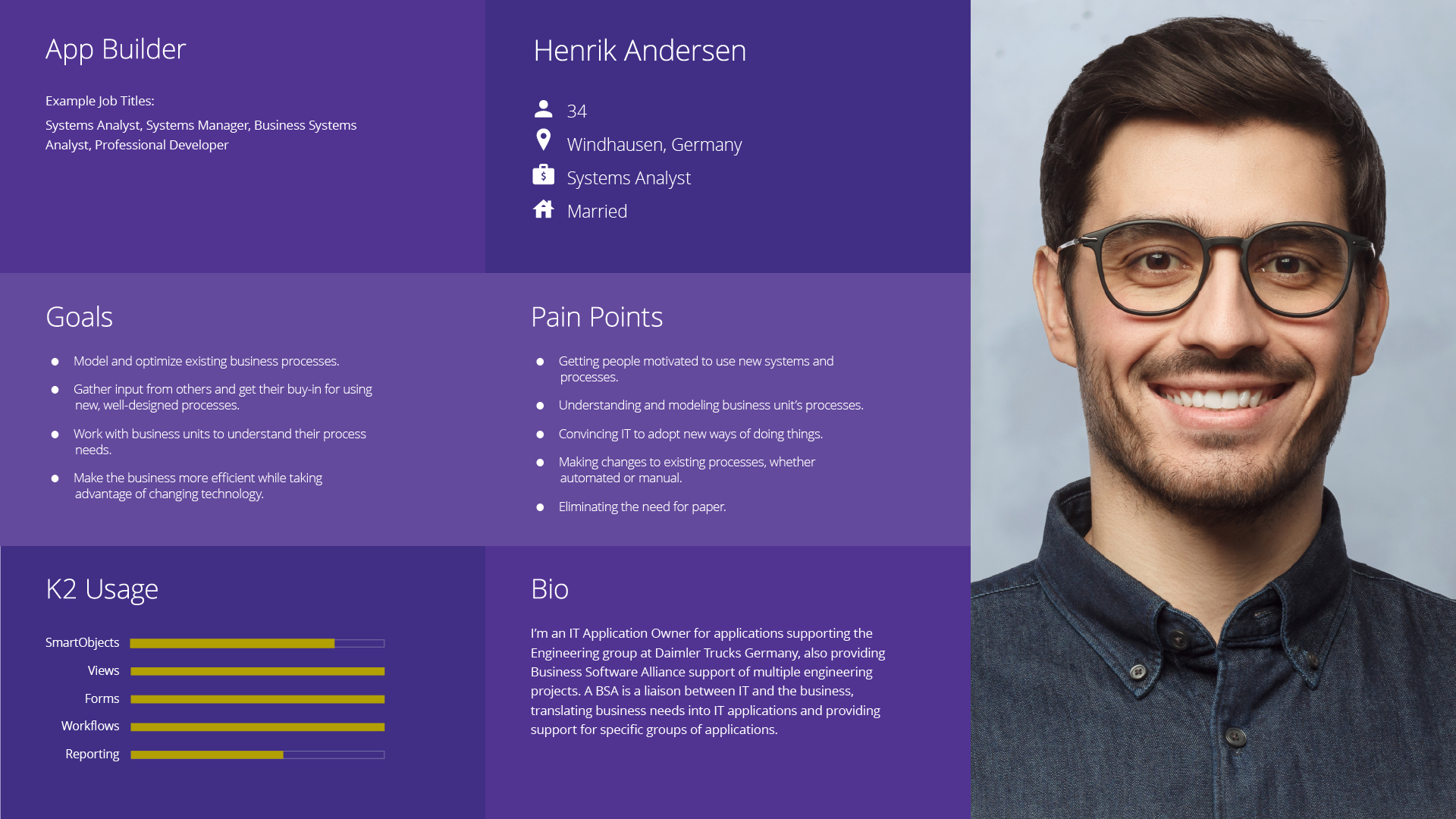

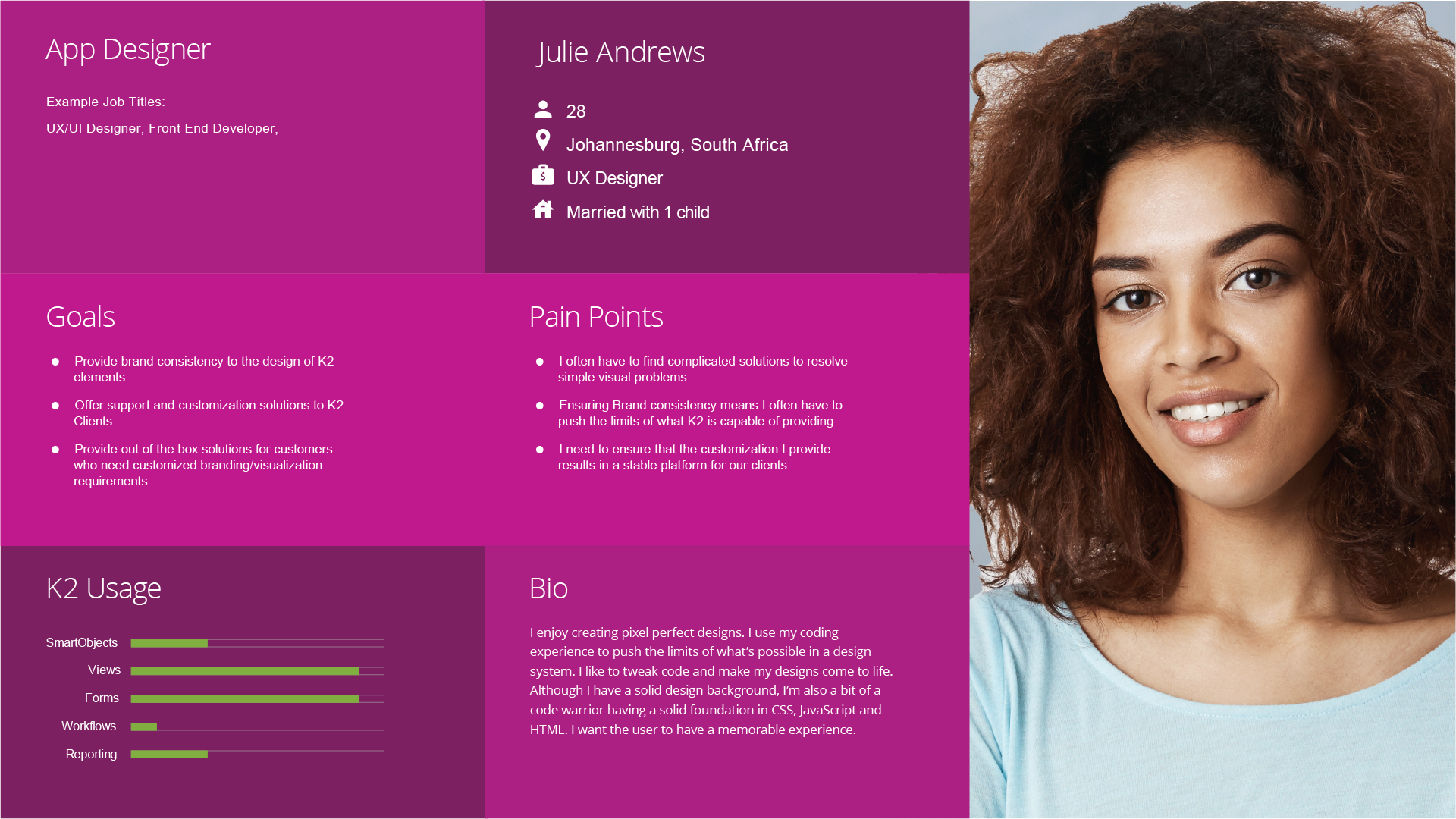

As a SaaS enterprise, K2 has approximately 2.3 million users around the world. Since our product is used within various departments of large organizations, we have a wide variety of users: IT admins, developers, business analysts, and designers all use our product. Because of such a large and varied audience, we had to narrow our personas down based on how people use our product. The two primary users who would use App Designer on a daily basis are our App Designer and App Builder personas.

Because K2 is a global company, I wanted to test users from our primary markets.

- 5 participants from AMER (Seattle, Florida, New York, Colorado, California)

- 2 participants from APAC (Japan, Australia)

- 3 participants from EMEA (South Africa)

Screener Questions

I worked with the Product Manager to create a screener that he could send to attendees of our most recent User Conference, where we previewed App Designer for the first time. The purpose of the screener was to make sure I was testing the right personas and that each participant was able and willing to participate in testing. The screener consisted of a list of questions covering the following.

- Occupation

- Experience level with K2 product

- Team size

- Industry

- Able to read and understand English language

- Internet connectivity (speed)

- Agree to Terms & Conditions

Session Overview

Preamble (5 mins)

I started each session with a brief introduction of myself and a few icebreaker questions to get to know the participant and help them relax. I then gave a brief overview of how the 1-hour session would go and asked if they had any questions. I reiterated a few points to make sure the participant was comfortable:

- We are not testing you, we are testing the design.

- Please share your thoughts as much as possible while completing each scenario. The more information the better.

- You will be anonymous so please be honest with your thoughts. I will not be offended if you don’t like a design.

Usability Study (25 mins)

Three scenarios provided a general guide for what I wanted each participant to do within the MVP release and the prototype (5 participants started with the MVP, 5 started with the prototype).

3 Scenarios

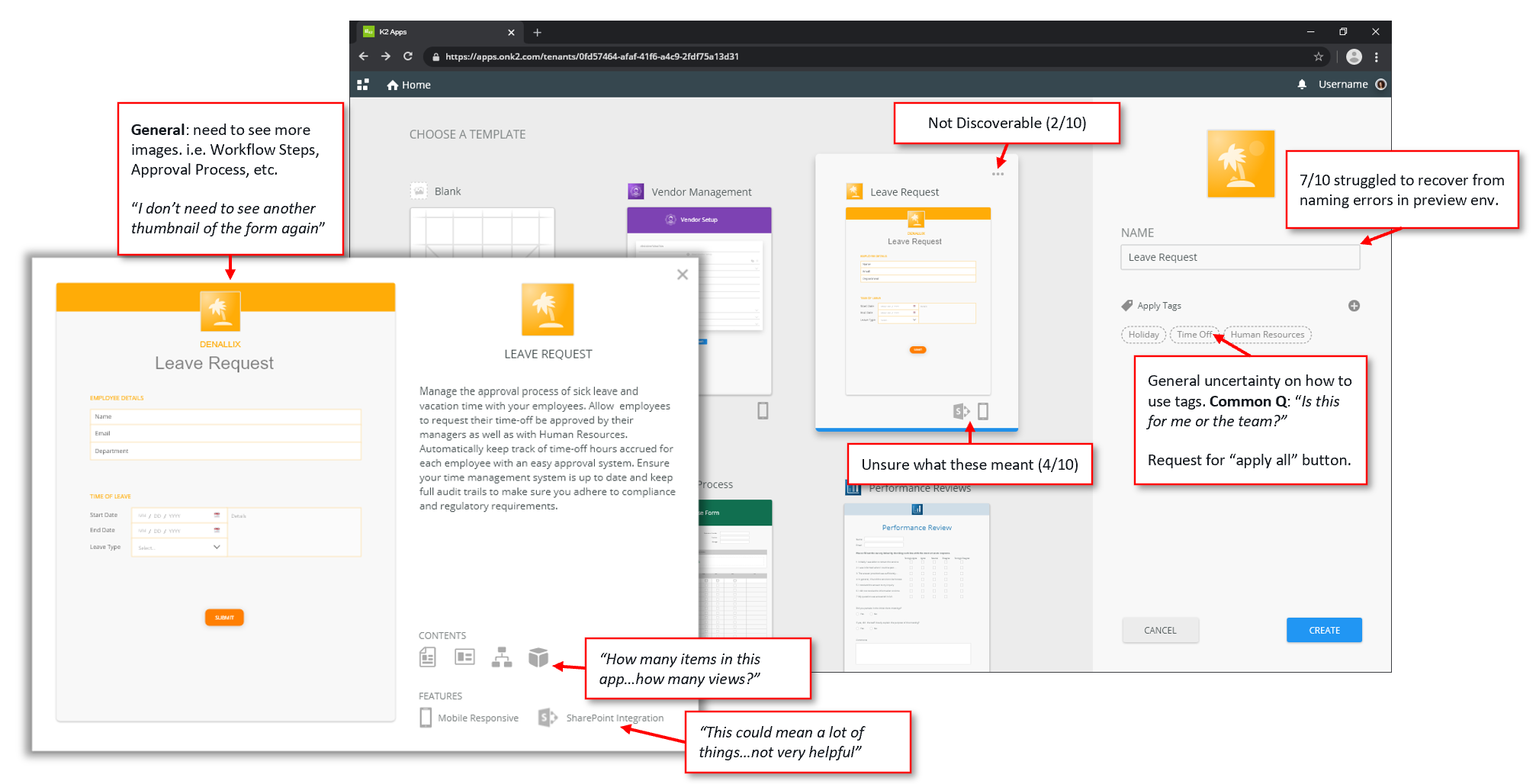

- “The HR department emailed you and said they would like you to create an app that would automate their leave request process.”

- “Jane Austin emailed you and said that she created a new request form that she would like you to use as the default startform for the leave request app.”

- “Your boss messaged you and said she would like you to rename the workflow called, ‘Very Magical Mystery Workflow’ to just ‘Basic Workflow’.”

Tasks I observed and measured within each scenario include:

- User can locate new app button

- User chose ‘leave request’ template over blank

- User able to locate more information about templates

- User can recover from app name errors

- User applied tags to app

- Used filter panel to find file (1st attempt)

- Used right-click to edit file (1st attempt)

- User able to update startform

Semantic Differential Scale

As part of the usability study, I asked each participant to rate each scenario in both environments (6 total ratings) on a scale from 1 to 10, with 1 being very difficult and 10 being very easy.

I allowed time for follow-up questions based on their ratings. I wanted to know why they rated a scenario low or high, and why a rating changed for the same scenario between the MVP and the prototype. Comparing these answers helped determine which features or specific elements made one environment easier or more difficult than the other.

Semi-Structured Interview (20 mins)

After all scenarios were tested and rated, I conducted a 20-minute interview in which I asked a series of questions, including:

- What are the 3 most common issues you have today when building an app?

- Does App Designer currently solve those three issues? If not, why not?

- Would you be willing to update today to the new App Designer? If not, why not?

Many of the participants showed me specific examples in our current product of how their process works today and the issues they currently have.

Wrap-Up: Q&A (10 mins)

All of the participants had been a part of a preview presentation of our App Designer. I wanted to make sure I had time at the end of the session to allow the user to ask any questions they may have around the App Designer.

Because I allowed time for this Q&A, a few of the users went out of their way to tell our executive team how much they enjoyed the sessions and that they felt ‘heard’ and ‘included’.

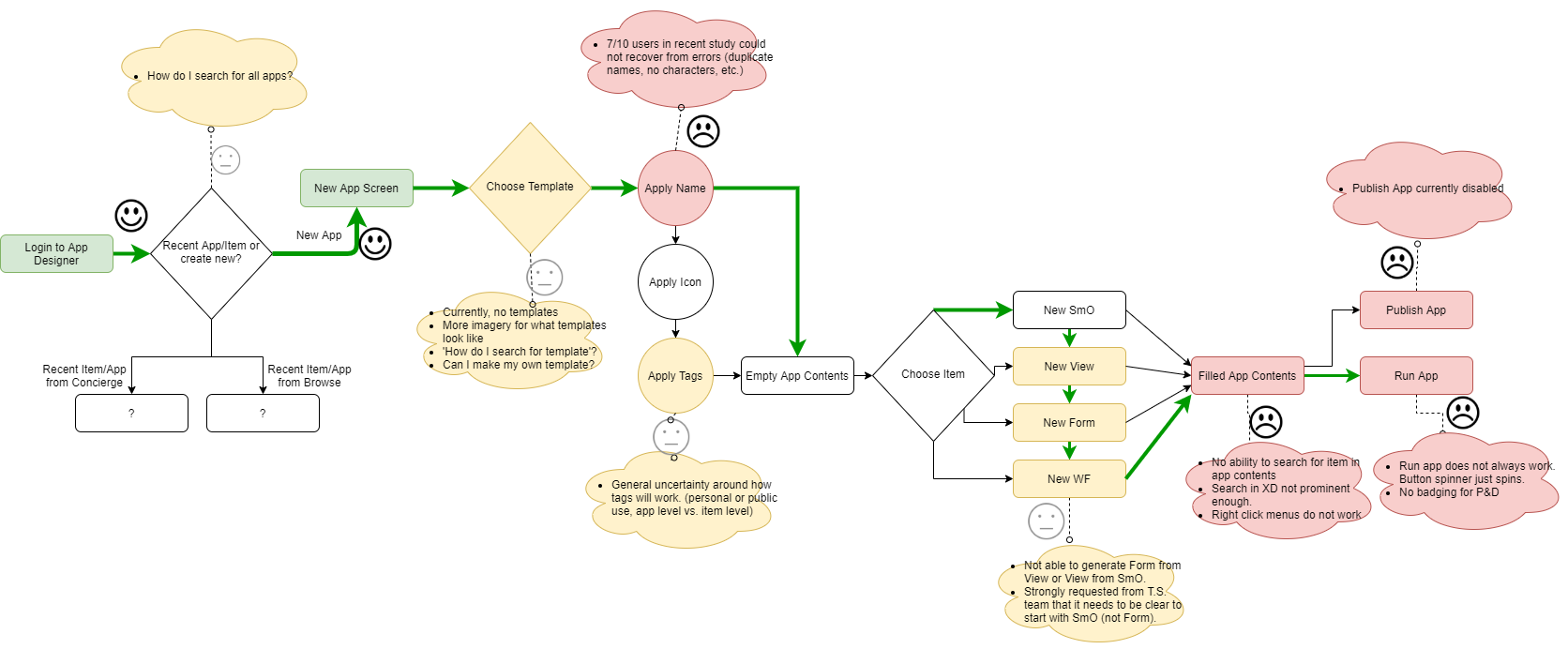

Findings (Analysis)

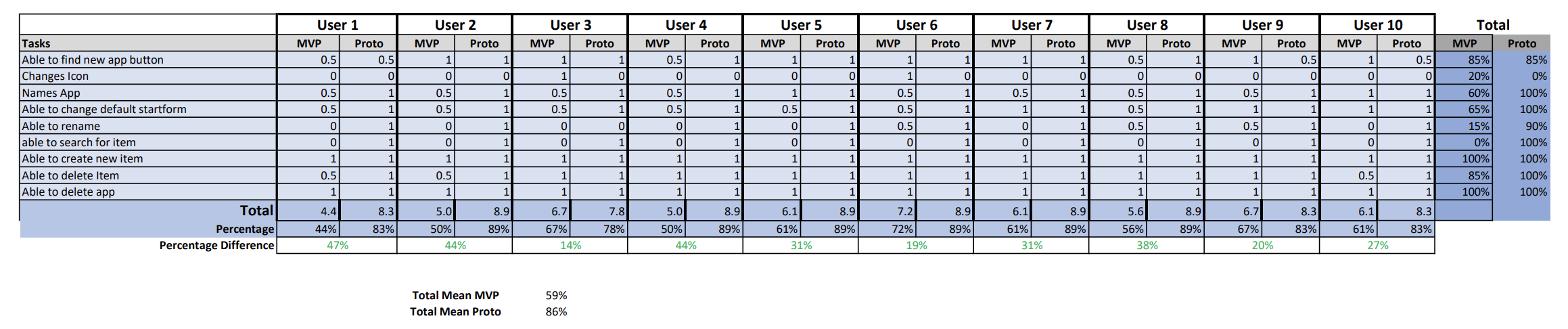

Success Rate

For each task, I gave a completion rating (0 = not completed, 0.5 = partially completed, 1 = completed). Each participant could earn a total of 20 points. I used the mean across all participants to find the average percent completion for each individual task and for the study as a whole.

Why mean and not median?

The rating system was structured in a way that minimized skewed results: each task score is limited to 0, 0.5, or 1, so no single outlier can pull the average far. Using the mean also maintains consistency across all tests for later re-evaluation.
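The scoring described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the participant IDs, the shortened task list, and every score below are hypothetical placeholders, not data from the study (the real study had 10 participants and 20 available points each).

```python
from statistics import mean

# Hypothetical task names and scores, for illustration only.
# 0 = not completed, 0.5 = partially completed, 1 = completed.
TASKS = ["locate new app button", "choose template", "update startform"]
SCORES = {
    "P1": [1, 0.5, 0],
    "P2": [1, 1, 0.5],
    "P3": [0.5, 1, 0],
    "P4": [1, 0, 0.5],
}


def task_success_rates(scores, tasks):
    """Return the mean completion for each task as a percentage."""
    return {
        task: 100 * mean(row[i] for row in scores.values())
        for i, task in enumerate(tasks)
    }


def overall_success_rate(scores):
    """Return the mean share of available points earned, as a percentage."""
    return 100 * mean(sum(row) / len(row) for row in scores.values())


if __name__ == "__main__":
    for task, rate in task_success_rates(SCORES, TASKS).items():
        print(f"{task}: {rate:.1f}%")
    print(f"overall: {overall_success_rate(SCORES):.1f}%")
```

Keeping the calculation in one place like this makes it easier to rerun the same formula on later rounds of testing, which is the point of a baseline.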

Semantic Differential Scale

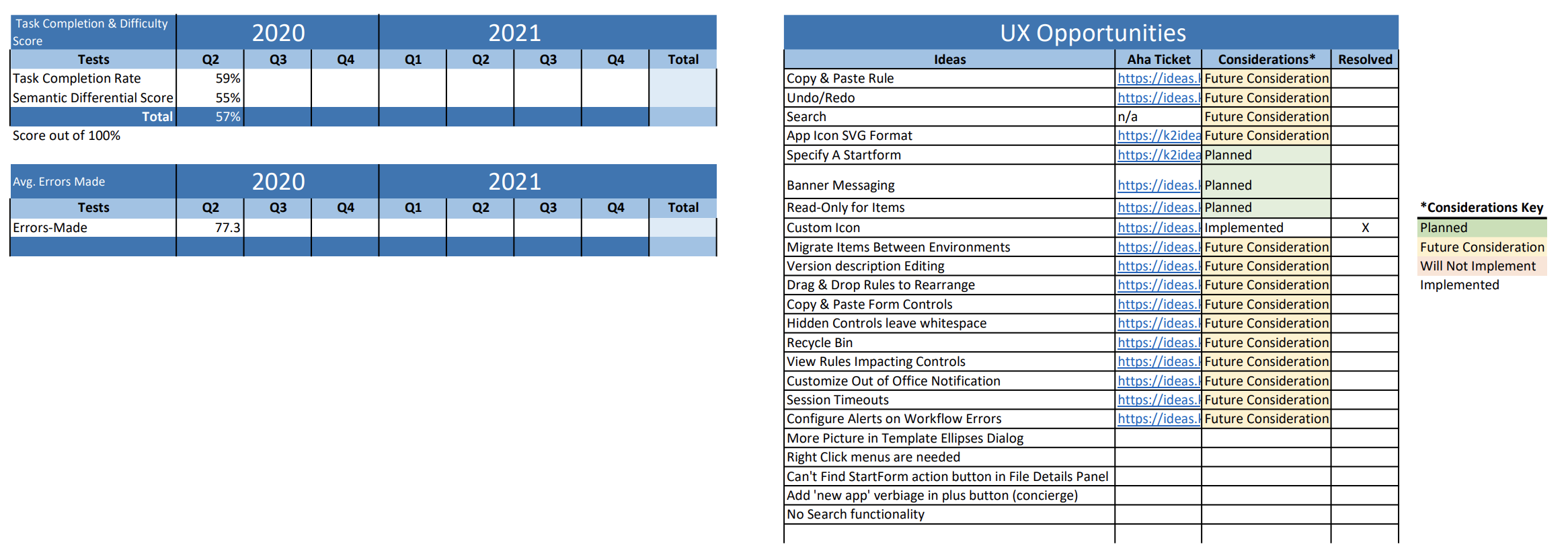

Using the 1 to 10 ratings, I calculated the average score for each individual scenario and for the study as a whole. The mean served as a baseline number that we could use to re-evaluate after future updates.
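As a rough sketch of how these averages could be computed and compared between the two environments, the Python below groups ratings by environment and scenario. The scenario labels and all rating values are hypothetical; they are not the ratings collected in the study.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical ratings: (environment, scenario, rating),
# where 1 = very difficult and 10 = very easy.
RATINGS = [
    ("MVP", "Scenario 1", 4), ("MVP", "Scenario 1", 6),
    ("Prototype", "Scenario 1", 8), ("Prototype", "Scenario 1", 9),
    ("MVP", "Scenario 2", 5), ("MVP", "Scenario 2", 7),
    ("Prototype", "Scenario 2", 7), ("Prototype", "Scenario 2", 8),
]


def mean_by_environment_and_scenario(ratings):
    """Return the mean rating for each (environment, scenario) pair."""
    grouped = defaultdict(list)
    for environment, scenario, rating in ratings:
        grouped[(environment, scenario)].append(rating)
    return {key: mean(values) for key, values in grouped.items()}


def overall_mean(ratings):
    """Return the mean of every rating, regardless of scenario."""
    return mean(rating for _, _, rating in ratings)


if __name__ == "__main__":
    means = mean_by_environment_and_scenario(RATINGS)
    for (environment, scenario), value in sorted(means.items()):
        print(f"{environment} / {scenario}: {value}")
    print(f"overall: {overall_mean(RATINGS)}")
```

Comparing the MVP and prototype means for the same scenario gives a simple number to pair with the follow-up answers about why a rating changed.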

Click-Stream Analysis

I counted the number of clicks each user made in error as well as any clicks deemed unnecessary (i.e., clicking around to try to find information to complete a task). Because this test was less structured than the other tests and could produce skewed results, I used the median as the baseline for measuring efficiency. The median and total number of clicks can be used to gauge improvements over time with each evaluation.

Additionally, when reviewing the recordings for error clicks, I called out areas where errors were made because the design did not follow usability heuristics. This heuristic analysis was included in the final report.
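To illustrate why the median suits this kind of count, here is a small Python sketch. The click counts are hypothetical and chosen so that one participant clicks far more than the rest; they are not the study's numbers.

```python
from statistics import mean, median

# Hypothetical error/unnecessary click counts per participant.
# P5 is a deliberate outlier to show the effect on each statistic.
ERROR_CLICKS = {"P1": 3, "P2": 5, "P3": 4, "P4": 2, "P5": 21}


def click_baseline(clicks):
    """Return the median, mean, and total of the click counts."""
    counts = list(clicks.values())
    return {
        "median": median(counts),
        "mean": mean(counts),
        "total": sum(counts),
    }


if __name__ == "__main__":
    baseline = click_baseline(ERROR_CLICKS)
    print(f"median: {baseline['median']}")
    print(f"mean:   {baseline['mean']}")
    print(f"total:  {baseline['total']}")
```

With these made-up numbers, a single participant who got lost raises the mean well above what most participants experienced, while the median stays close to the typical count.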

Semi-Structured Interview

I used an inductive, latent approach for the thematic analysis. My themes were the common issues that users currently have. Within each theme, I then grouped phrases as positive or negative to determine the sentiment toward App Designer.
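The coding itself is manual, but once phrases have been assigned a theme and a sentiment, the tally can be sketched as below. The theme names and coded phrases are hypothetical examples, not the themes found in the study.

```python
from collections import Counter

# Hypothetical coded phrases: (theme, sentiment) pairs produced by manual coding.
CODED_PHRASES = [
    ("finding items", "positive"),
    ("finding items", "negative"),
    ("finding items", "positive"),
    ("templates", "negative"),
    ("templates", "negative"),
    ("deployment", "positive"),
]


def sentiment_by_theme(coded_phrases):
    """Count positive and negative phrases per theme and compute the net score."""
    counts = Counter(coded_phrases)
    themes = sorted({theme for theme, _ in coded_phrases})
    summary = {}
    for theme in themes:
        positive = counts[(theme, "positive")]
        negative = counts[(theme, "negative")]
        summary[theme] = {
            "positive": positive,
            "negative": negative,
            "net": positive - negative,
        }
    return summary


if __name__ == "__main__":
    for theme, result in sentiment_by_theme(CODED_PHRASES).items():
        print(theme, result)
```

A net score per theme is only one possible summary; the raw positive and negative counts are kept so the balance behind each number stays visible.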

Conclusion

Where are we currently?

List of Hypotheses

- Users will know how to find and edit items someone else created. (Partially)

- Users will have the features they need to organize multiple items (Yes)

- Users can do basic actions (edit/delete/rename/copy/search) (Partially)

- Templates will provide all the features a user needs to package and deploy. (No)

- App Designer will reduce the time it takes to build an App currently. (No)

- Users have access to all the features they need to publish an app. (No)

- Users will want to update their existing environments to App Designer. (No)

ISO Usability Standards

- Efficiency

- No: Currently, App Designer does not improve the speed of tasks or reduce the level of effort in building an app. The one area where App Designer is efficient is organizing items.

- Effectiveness

- Partially: The current App Designer allows users to perform the basic functions needed to deploy an app. However, due to the lack of efficiency, executing basic tasks is difficult.

- Satisfaction

- No: Currently, users are not willing to update their environments to the new App Designer. Additionally, App Designer does not solve the majority of the issues users have when building an app today.

Are we headed in the right direction?

List of Hypotheses

- Users will know how to find and edit items someone else created. (Yes)

- Users will have the features they need to organize multiple items (Yes)

- Users can do basic actions (edit/delete/rename/copy/search) (Yes)

- Templates will provide all the features a user needs to package and deploy. (No)

- App Designer will reduce the time it takes to build an App currently. (Undecided)

- Users have access to all the features they need to publish an app. (Yes)

- Users will want to update their existing environments to App Designer. (Maybe)

ISO Usability Standards

- Efficiency

- Yes: Many of the features in the prototype will speed up the building process through the use of templates, right-click actions, version control, and dependency views.

- Effectiveness

- Yes: App Designer users will be able to go beyond basic functions and access most of the features they need to publish an app. A few of the users needed further insight into how running an app and the K2 Workspace site will function before they could determine effectiveness.

- Satisfaction

- Yes: Users were more inclined to switch to App Designer if they had all the features provided in the prototype.

Next Steps

A list of UI designs will be updated to better follow usability heuristics in the MVP and prototype interfaces. In the Excel report, I also listed the features that users considered high priority; many already had feature request tickets (in Aha!). I am currently meeting with the Product Manager and development team to story point the list of requests.

Once a feature has been added into the App Designer, I will conduct rapid user tests to gather additional feedback.

Where I Can Improve

- Give users more time to struggle with the UI. I don’t want the user to feel rushed if they can’t find how to solve a scenario. I also need to find a balance so that we don’t spend the majority of the time on one task.

- I must be careful not to lead the witness within my scenarios. Don’t use exact wording in the scenarios if they are meant to be general. I will need to remember to further define what I want to get out of the test. Is it purely unstructured observations? Or is it more task-based initiatives?

- Do a trial run with someone else (not me) to catch any last-minute testing errors. Even with tight time constraints for testing, a trial run is essential.